How OnlyFans Piracy Is Ruining the Internet for Everyone

The internet is becoming harder to use because of unintended consequences in the battle between adult content creators who are trying to protect their livelihoods and the people who pirate their content.

Porn piracy, like all forms of content piracy, has existed for as long as the internet. But as more individual creators make their living on services like OnlyFans, many of them have hired companies to send Digital Millennium Copyright Act (DMCA) takedown notices against the sites that steal their content. As some of those services turn to automation to handle the workload, completely unrelated content is getting flagged as violating their copyrights and is being deindexed from Google search. The process exposes bigger problems with how copyright violations are handled on the internet, with automated systems filing takedown requests that are reviewed by other automated systems, leading to unintended consequences.

These errors show another way in which automation without human review is making the internet as we know it increasingly unusable. They also highlight the untenable piracy problem for adult content creators, who have little recourse to stop their paid content from being redistributed all over the internet.

I first noticed how bad some of these DMCA takedown requests are because one of them targeted 404 Media. I was searching Google for an article Sam wrote about Instagram’s AI therapists. I Googled “AI therapists 404 Media,” and was surprised it didn’t pop up because I knew we had covered the subject. Then I saw a note at the bottom of the page stating that Google had removed some search results “in response to multiple complaints we received under the US Digital Millennium Copyright Act.”

The notice linked to the Lumen Database, which keeps a record of DMCA complaints, who filed them, and for what. According to the Lumen Database, the complaint was filed by a company called Takedowns AI on behalf of content creator Marie Temara. Takedowns AI is one of many companies that help content creators, especially adult content creators, scan the internet for images and videos that they posted behind paywalls on platforms like OnlyFans and that others then reposted elsewhere for free. These companies also file DMCA takedown requests and navigate the copyright systems of big platforms like YouTube, Instagram, and Reddit. One of the most effective ways of preventing people from finding this pirated content is sending DMCA takedown requests to Google asking the search engine to delist results that point to sites sharing it. As its name implies, Takedowns AI relies heavily on automation to do this work.

The complaint that impacted 404 Media included a list of 68 links to different websites that allegedly violated Temara’s copyright on content she posted to Instagram, OnlyFans, and other platforms. The allegedly offending link on 404 Media pointed to a collage Sam made for her AI therapists story.

The collage includes Meta's AI-generated profile pictures of three of these AI therapists. The story itself has nothing to do with Temara, and the profile pictures look nothing like her. In fact, it would be hard for anyone to claim copyright for that image because in 2023 a U.S. court ruled that AI-generated art can’t be copyrighted.

I went through every other link in the same complaint and couldn’t find even one link that looked like it violated Temara’s copyrights. There were images from Grand Theft Auto V, famous baseball players, robots, stock images of people at theme parks, and movie posters, none of which looked remotely similar to Temara. In addition to 404 Media’s article about AI therapists, some of the pages that Google removed from search results due to this complaint included tech site wccftech.com, horror movie site bloody-disgusting.com, and rugby and wrestling sites.

These links are also just part of one complaint out of hundreds that Takedowns AI files every day. I looked through dozens of complaints to Google filed by Takedowns AI that were archived by the Lumen Database. The vast majority of them appear to be legitimate, but I did find other egregious mistakes. One of the worst was a takedown request filed on behalf of a creator who goes by “honeyybee” against an article about actual honey bees on the University of Missouri’s website. The takedown request clearly targeted the article and caused Google to remove it from search results just because it was about a subject with a name similar to that of Takedowns AI’s client.

Temara and honeyybee did not respond to a request for comment.

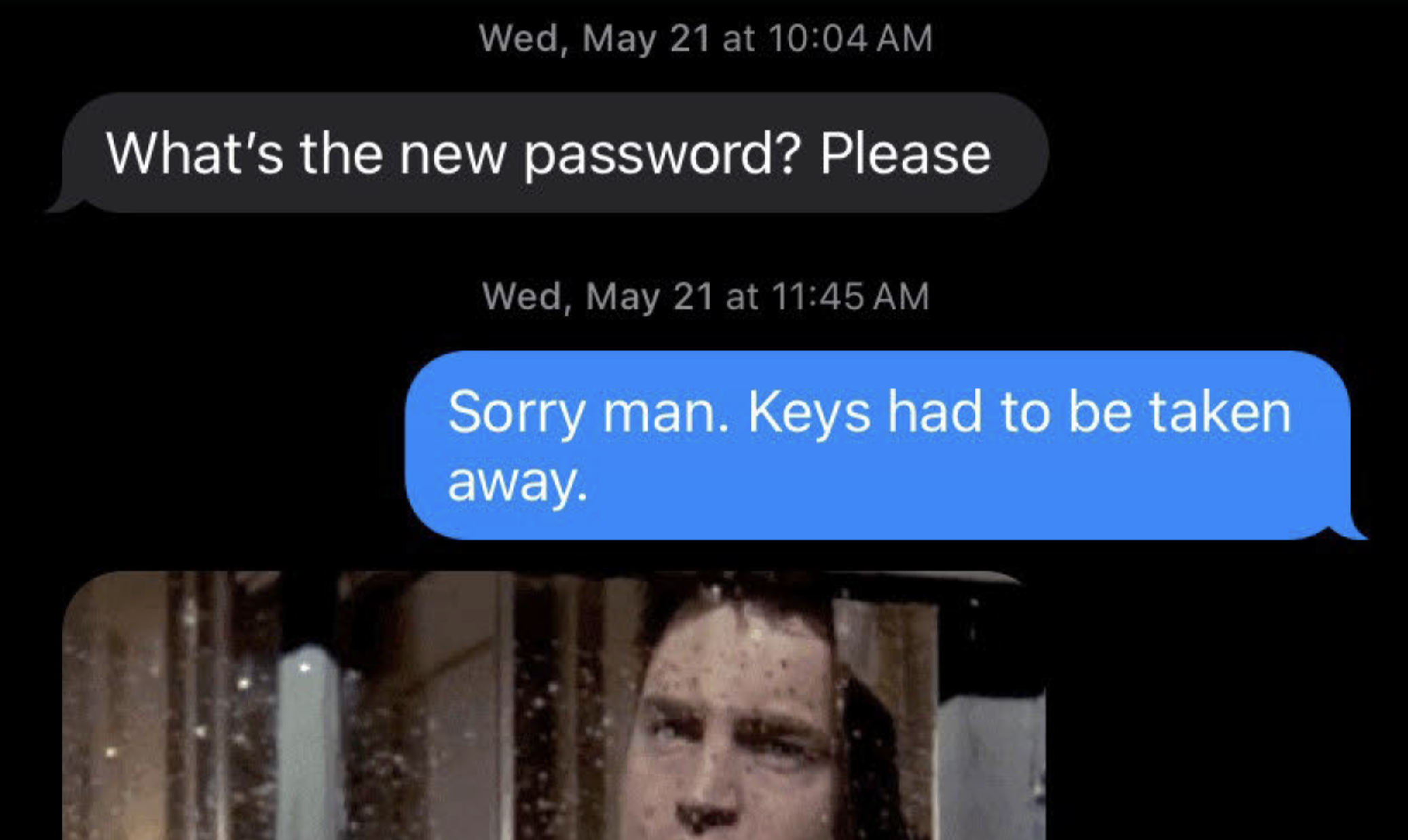

Takedowns AI CEO Kunal Anand told me that the company has filed 12 million takedown requests to Google since 2022. He said that Takedowns AI uses facial recognition, keyword searches, and human reviewers to find and take down copyrighted content, and that he was overall confident in the company’s accuracy. Anand told me that sometimes his clients use Google Search’s API to see what results come up when they search for themselves, then ask Takedowns AI to remove everything on that list as is, which is what he thinks might have happened with Temara and honeyybee.

“We don't really review it [the list] because we are an agent for them,” Anand said. “For the requests that we send out ourselves, usually they get reviewed, but sometimes they [clients] do a search by themselves, and they come across some content and they flag it and they're like, ‘We want this taken down.’ We don't review that because that is something that they want taken down. I'm not particularly sure about this case, but that is what happens. What we planned on doing was also reviewing these but it's usually not very fruitful, because the user is very sure they want that claim. And even if we say, ‘Hey, we don't think you should do that,’ they're like, ‘We want to do it. Just do it because I'm paying you for this.’ And if we just say, do it yourself, that kind of takes away the business from us. So that is basically how it works.”

Yvette van Bekkum, the CEO of Cam Model Protection, a company that offers the same services as Takedowns AI but has been in business since 2014, told me that her company does not process client requests like the ones Anand described. Cam Model Protection also uses AI, reverse image searches, and keyword searches to find infringing material, but it has systems in place to prevent false positives, Bekkum told me. These include a database of “whitelisted” content that it shouldn’t file takedown requests against, and human verification that each link the company sends to Google actually points to infringing content.

“Just a news article is not a copyright infringement,” Bekkum told me. “If there is no content being used or only a name being named, I don't need to explain to you that it's not in violation. Everybody can make a mistake, of course, but if you just randomly gather [links] and then report it, if it's not grounded on an infringement, you should not report it, of course.”

Bekkum and Anand both said they understand why creators don’t want to click on every link that might be infringing on their copyright. It’s not only too much work (which is why companies like Cam Model Protection exist in the first place), it also requires sifting through a sea of pornography they don’t want to see.

“This process is so time consuming,” Bekkum said. “And they do not want to focus on all that negative energy in Googling their name and seeing pages and pages full of links leading to illegal content.”

Elaina St. James, an adult content creator, told me she used a copyright takedown service and that it was most helpful when she flagged offending sites herself. St. James said she used the service to take down pirated content as well as catfishing accounts using her images, a problem 404 Media previously talked to her about. Overall, St. James said these services are useful but imperfect.

“I think they [DMCA takedown request companies] should stop overpromising,” she told me in an email. “There are some platforms—TikTok in particular—that do not comply. Tube sites in foreign countries also rarely comply.”

Automation of DMCA takedown requests has existed for years and has always resulted in some errors. Similar problems have also plagued YouTube’s automated Content ID system for years. More sites are likely to get caught in the crossfire as more content creators strike out on their own and turn to these services in an attempt to protect their income.

It’s an issue at the intersection of several critical problems with the modern internet: Google’s search monopoly, rampant porn piracy, a DMCA takedown process vulnerable to errors and abuse, and now the automation of all of the above in order to operate at scale. No one I talked to for this story thought there was an easy solution to this problem.

“It's all science fiction, but in the dumbest possible way,” Meredith Rose, a senior policy counsel with Public Knowledge who focuses on copyright, DMCA, and intellectual property reform, told me. “At the end of the day, the DMCA takedown provisions are a way to get speech off the internet. That's a very powerful tool. Even if you're not outright malicious, if somebody says something nasty about you and you want to keep your name out of their mouth, the DMCA kind of lets you do it without anybody checking your work. And so it is this really interesting case study in when you build these tools that give the power to anybody, even people who might not be who they say they are in these applications to get stuff taken down. Abuse happens. Sometimes it happens at scale. It happens for all different kinds of reasons. Sometimes it's just malice, sometimes it's incompetence, sometimes it's buggy automation [...] I feel like with AI, we're going to see a lot more of this.”

Anand said he believes the responsibility is with creators.

“The best way to solve that is to educate the creators more that this is not their content,” he told me. “A lot of creators are very scared, and what they want is everything about them taken down from everywhere. And then they start getting more aggressive with their takedowns.”

A spokesperson for Google told me that the vast majority of DMCA removals come from reporting parties who have a track record of valid takedowns, and that its DMCA removals process aims to strike a balance between making it easy and efficient for rightsholders to report infringing content and protecting free expression on the web.

“We actively fight fraudulent takedown attempts by using a combination of automated and human review to detect signals of abuse,” the Google spokesperson said. “We provide extensive transparency about these removals to hold requesters accountable, and sites can file counter notices if they believe a removal was made in error.”