This Stunning Image of the Sun Could Unlock Mysterious Physics

Welcome back to the Abstract! What an extreme week it has been in science. We’ve got extreme adaptations and observations to spare today, so get ready for a visually spectacular tour of deep seas, deep time, and deep space.

First up, a study with an instant dopamine hit of a title: “Extreme armour in the world’s oldest ankylosaur.” Then, stories about two very different marine creatures that nonetheless share a penchant for brilliant outfits and toxic lifestyles; a baby picture that requires a 430-light-year zoom-in; and lastly, we must once again salute the Sun in all its roiling glory. Enjoy the peer-reviewed eye-candy!

Ankylosaurs: Swole from the start

Maidment, Susannah et al. “Extreme armour in the world’s oldest ankylosaur.” Nature.

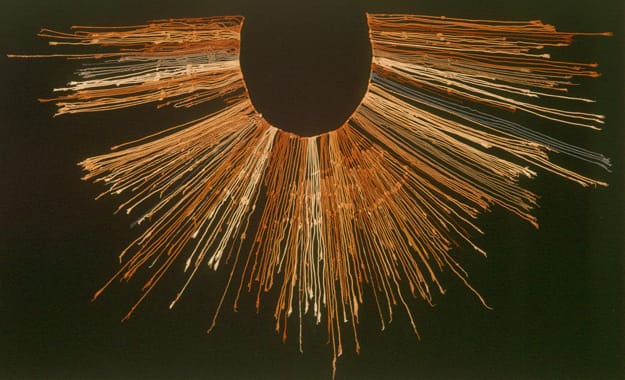

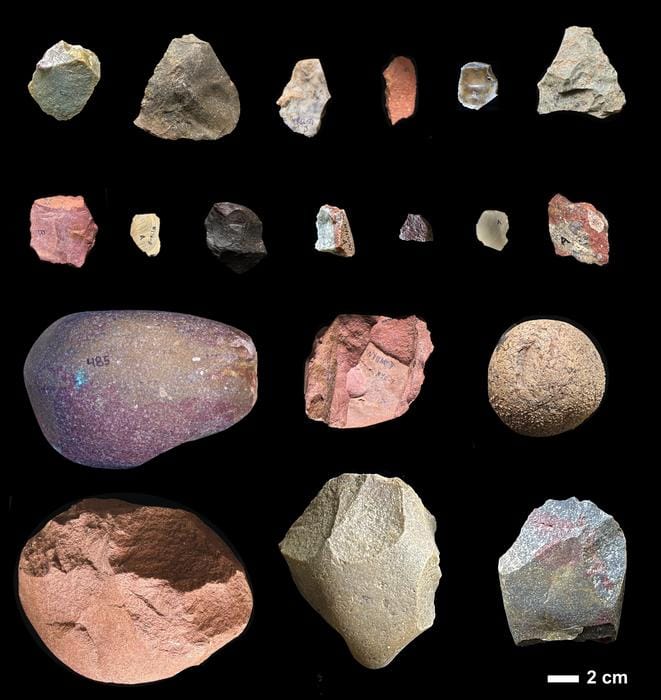

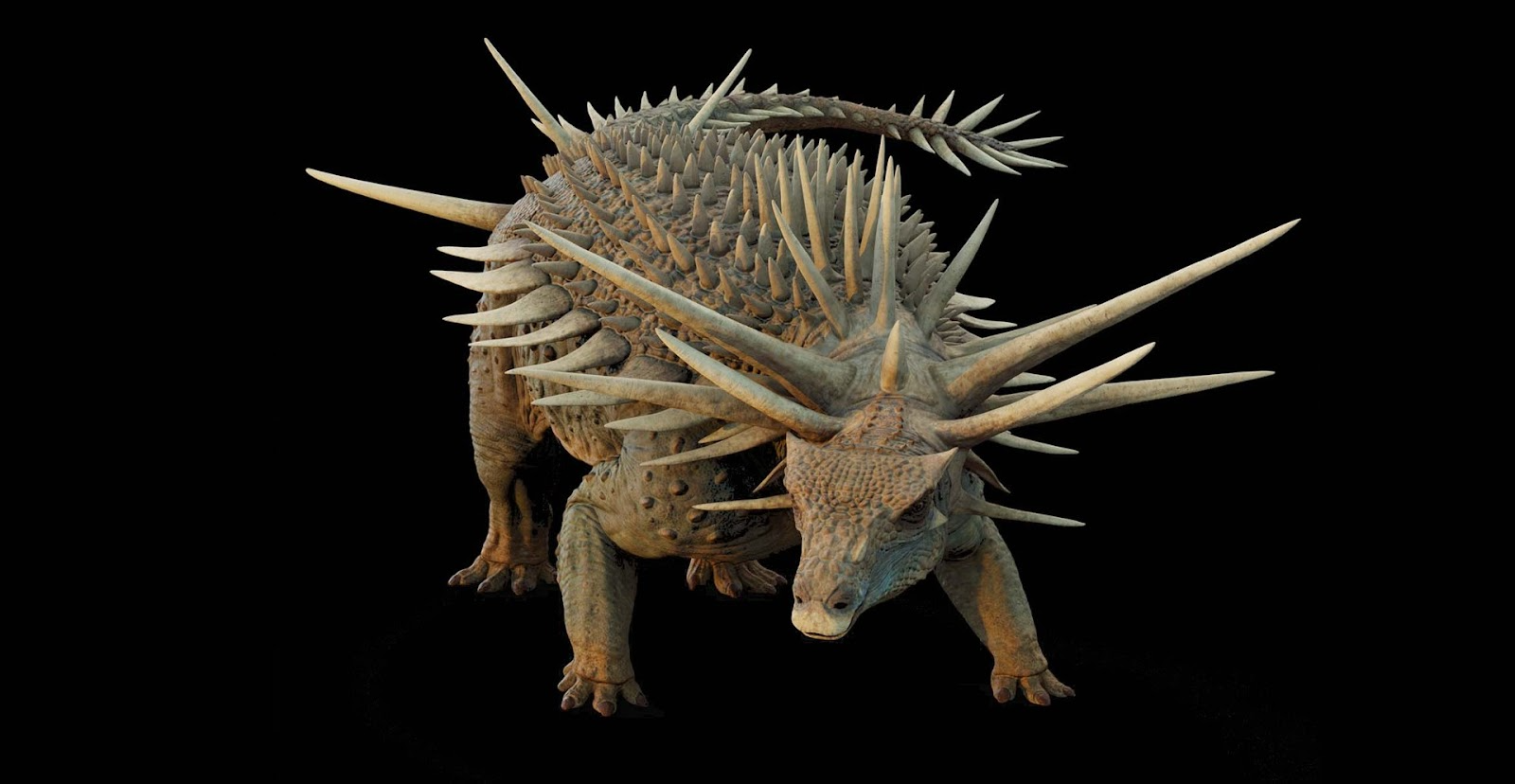

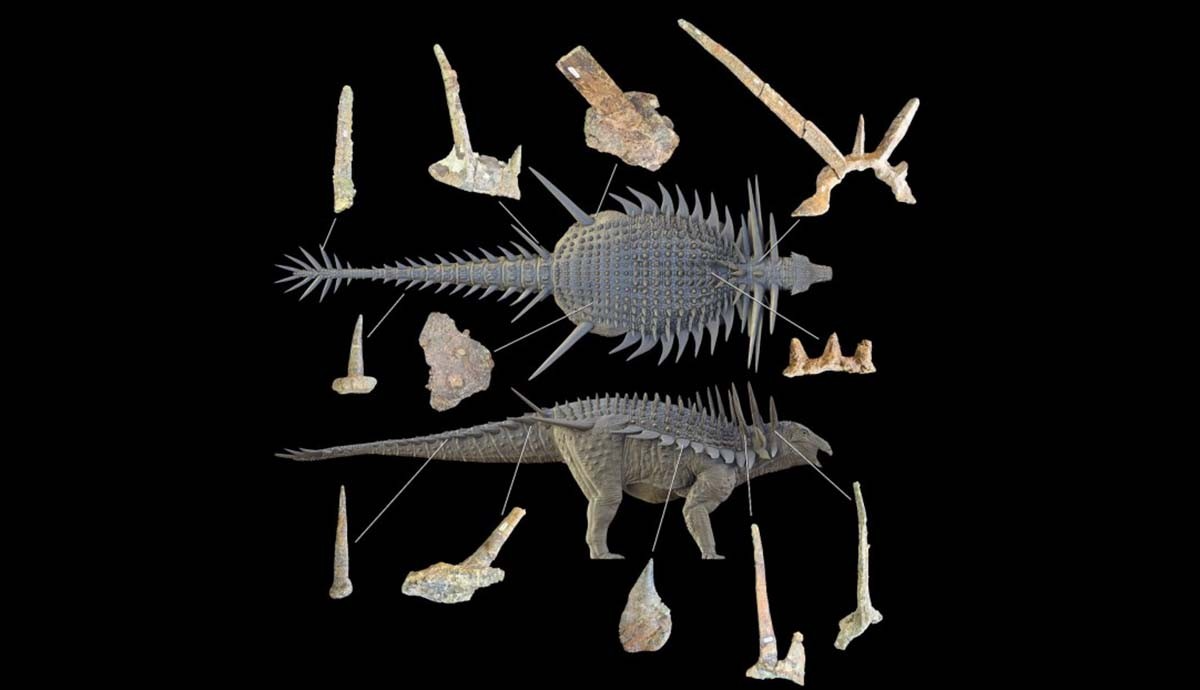

Paleontologists have discovered an ankylosaur that is epic even by the high standards set by this family of giant walking tanks. Partial remains of Spicomellus—the oldest known ankylosaur, dating back 165 million years—reveal that the dinosaur had much more elaborate body armor than later generations, including a collar of bony spikes up to three feet long, and fused tail vertebrae indicating an early tail weapon.

Ankylosaurs are known for their short-limbed frames, clubbed tail weapons, and thick-plated body armor that puts Batman to shame. These dinosaurs, which could reach 30 feet from beak to club, are mostly known from Late Cretaceous fossils. As a consequence, “their early evolution in the Early–Middle Jurassic is shrouded in mystery due to a poor fossil record” and “the evolution of their unusual body plan is effectively undocumented,” according to a new study.

In October 2022, a local farmer in the Moroccan badlands discovered a partial skeleton that fills in this tantalizing gap. The fossils suggest that the plates, spikes, and weaponized tails were features of ankylosaurian anatomy from the Jurassic jump.

“The new specimen reveals extreme dermal armour modifications unlike those of any other vertebrate, extinct or extant,” said researchers led by Susannah Maidment of the Natural History Museum in London. “Given that Spicomellus is an early-diverging ankylosaur or ankylosaurid, this raises the possibility that ankylosaurs acquired this extravagant armour early in their evolutionary history, and this was reduced to a simpler arrangement in later forms.”

As you can see, this early ankylosaur was the living embodiment of the phrase “try me.” Two huge spikes, one of which is almost entirely preserved, flanked the “cervical half-ring” on the animal’s neck. The fossils are so visually astonishing that, at first glance, they almost look like an arsenal of spears, axes, and clubs from an ancient army.

The team doesn’t hide their amazement at the find, writing that “no known ankylosaur possesses any condition close to the extremely long pairs of spines on the cervical half-ring” and noting that the fossils overturn “current understanding of tail club evolution in ankylosaurs, as these structures were previously thought to have evolved only in the Early Cretaceous.”

This incredible armor may have initially evolved as a sexual display that was later adapted for defensive purposes with the rise of “multitonne predators” like T. rex. That might explain why the ornaments seem to have simplified over time. Whatever the reason, the fossils demonstrate that ankylosaurs, as a lineage, were born ready for a fight.

In other news…

Now you sea(horse) me

We’ll move now from the extremely epic to the extremely twee. Pygmy seahorses, which measure no more than an inch, mimic the brightly colored and venomous gorgonian corals that they symbiotically inhabit. Scientists have now discovered that these tiny animals achieved their extraordinary camouflage in part by discarding a host of genes involved in growth and immune response, perhaps because their protective coral habitats rendered those traits obsolete.

“We analyzed the tiny seahorse’s genome revealing the genomic bases of several adaptations to their mutualistic life,” said researchers led by Meng Qu of the South China Sea Institute of Oceanology, Chinese Academy of Sciences. The analysis suggests “that the protective function of corals may have permitted the pygmy seahorse to lose an exceptionally large number of immune genes.”

Living in a toxic environment can have its benefits, if you’re a seahorse. And that is the perfect segue to the next story…

When life hands you arsenic, make lemon-colored skin

After a long day, isn’t it nice to sink into a scalding bath of arsenic and hydrogen sulfide? That’s the self-care routine for Paralvinella hessleri, a deep-sea worm that “is the only animal that colonizes the hottest part of deep-sea hydrothermal vents in the west pacific,” according to a new study.

So, how are these weirdos surviving what should be lethally toxic waters that exceed temperatures of 120°F? The answer is a “distinctive strategy” of “fighting poison with poison,” said researchers led by Hao Wang of the Center of Deep-Sea Research, Chinese Academy of Sciences. The worm stores the arsenic in its skin cells and mixes it with the sulfide to make a dazzling mineral, called orpiment, that provides its bright yellow hue.

“This process represents a remarkable adaptation to extreme chemical environments,” the researchers said. “The yellow granules observed within P. hessleri’s epithelial cells, which are the site of arsenic detoxification, appear to be the key to this adaptation.”

My own hypothesis is that this worm offers an example of convergent evolution with Freddie Mercury’s yellow jacket from Queen’s legendary 1986 Wembley Stadium performance.

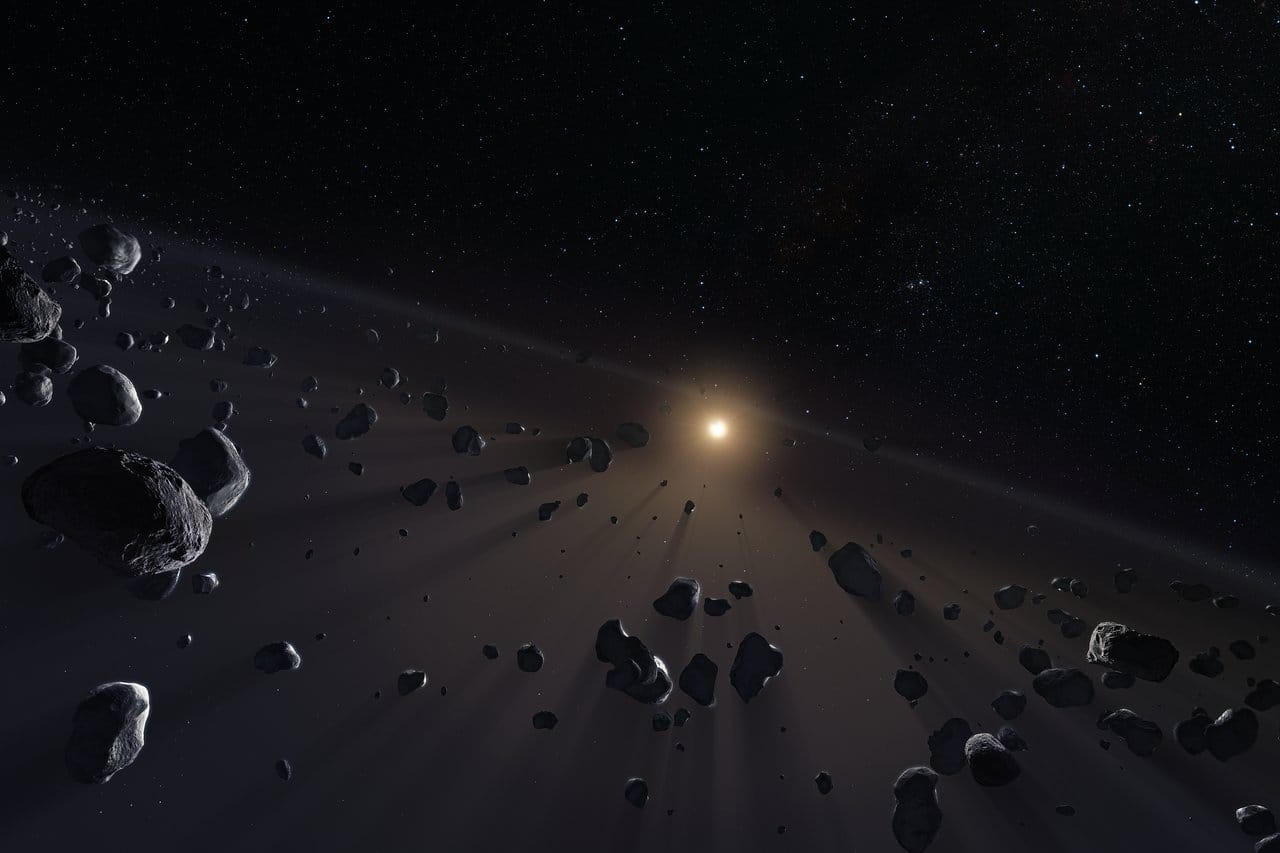

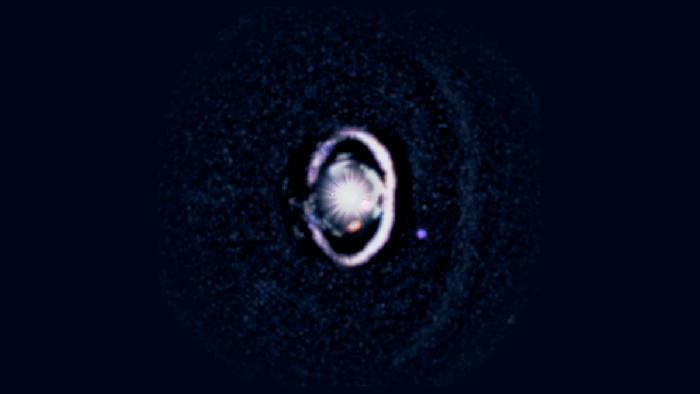

Mind the protoplanetary gap

Your baby photos are cute and all, but it’s going to be hard to top the pic that astronomers just snapped of a newborn planet 430 light-years from Earth. This image marks the first time that a planet has been spotted forming within a protoplanetary disk, which is the dusty, gassy material from which new worlds are born.

Our “images of 2025 April 13 and April 16 discovered an accreting protoplanet,” said researchers led by Laird Close of the University of Arizona. The protoplanet, called WISPIT 2b, “appears to be clearing a dust-free gap between the two bright rings of dust—as long predicted by theory.”

If Earth is the pale blue dot, then WISPIT 2b is the funky purple blob. Though stray baby planets have been imaged before in the cavity between their host stars and the young disks, this amazing image offers the first glimpse of the most common mode of planetary formation, which occurs inside the dusty maelstrom.

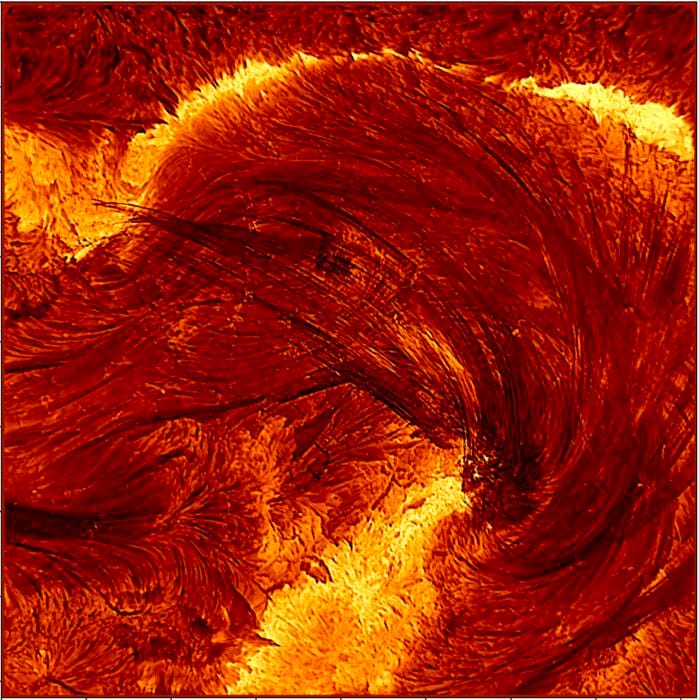

Welcome to the Arcade of Coronal Loops

We’ll close with yet another cosmic photoshoot, this time of everyone’s favorite star, the Sun, courtesy of the Daniel K. Inouye Solar Telescope (DKIST) in Hawaii. The telescope captured unprecedented pictures of a decaying solar flare at a key hydrogen-alpha (Hα) wavelength of 656.28 nanometers.