A Lost Planet Created the Moon. Now, We Know Where It Came From.

Welcome back to the Abstract! Here are the studies this week that overthrew the regime, survived outer space, smashed planets, and crafted an ancient mystery from clay.

First, a queen gets sprayed with acid—and that’s not even the most horrifying part of the story. Then: a moss garden that is out of this world, the big boom that made the Moon, and a breakthrough in the history of goose-human relations.

As always, for more of my work, check out my book First Contact: The Story of Our Obsession with Aliens, or subscribe to my personal newsletter, the BeX Files.

What is this, a regime change for ants?

Every so often, a study opens with such a forceful hook that it is simply best for me to stand aside and allow it to speak for itself. Thus:

“Matricide—the killing of a mother by her own genetic offspring—is rarely observed in nature, but not unheard-of. Among animal species in which offspring remain with their mothers, the benefits gained from maternal care are so substantial that eliminating the mother almost never pays, making matricide vastly rarer than infanticide.”

“Here, we report matricidal behavior in two ant species, Lasius flavus and Lasius japonicus, where workers kill resident queens (their mothers) after the latter have been sprayed with abdominal fluid by parasitic ant queens of the ants Lasius orientalis and Lasius umbratus.”

Mad props to this team for condensing an entire entomological epic into three sentences. Such murderous acts of dynastic usurpation were first observed by Taku Shimada, an ant enthusiast who runs a blog called Ant Room. Though matricide is sometimes part of a life cycle (like mommy spiders sacrificing their bodies for consumption by their offspring), there is no clear precedent for this newly reported form of matricide, in which neither the young nor the mother benefits from an evolutionary point of view.

In what reads like an unfolding horror, the invading parasitic queens “covertly approach the resident queen and spray multiple jets of abdominal fluid at her”—formic acid, as it turns out—that then “elicits abrupt attacks by host workers, which ultimately kill their own mother,” report Shimada and his colleagues.

“The parasitic queens are then accepted, receive care from the orphaned host workers and produce their own brood to found a new colony,” the team said. “Our findings are the first to document a novel host manipulation that prompts offspring to kill an otherwise indispensable mother.”

My blood is curdling and yet I cannot look away! Though this strategy is uniquely nightmarish, it is not uncommon for invading parasitic ants to execute queens in any number of creative ways. The parasites are just usually a bit more hands-on (or rather, tarsus-on) about the process.

“Queen-killing” has “evolved independently on multiple occasions across [ant species], indicating repeated evolutionary gains,” Shimada’s team said. “Until now, the only mechanistically documented solution was direct assault: the parasite throttles or beheads the host queen, a tactic that has arisen convergently in several lineages.”

When will we get an ant Shakespeare?! Someone needs to step up and claim that title, because these queens blow Lady Macbeth out of the water.

In other news…

That’s one small stem for a plant, one giant leaf for plant-kind

Scientists simply love to expose extremophile life to the vacuum of space to, you know, see how well they do out there. In a new addition to this tradition, a study reports that spores from the moss Physcomitrium patens survived a full 283 days chilling on the outside of the International Space Station, which is generally not the side of an orbital habitat you want to be stuck on.

Even wilder, most of the spacefaring spores were still able to germinate upon their return to Earth. “Remarkably, even after 9 months of exposure to space conditions, over 80% of the encased spores germinated upon return to Earth,” said researchers led by Chang-hyun Maeng of Hokkaido University. “To the best of our knowledge, this is the first report demonstrating the survival of bryophytes [the group of plants that includes mosses] following exposure to space and subsequent return to the ground.”

Congratulations to these mosses for boldly growing where no moss has grown before.

Hints of a real-life ghost world

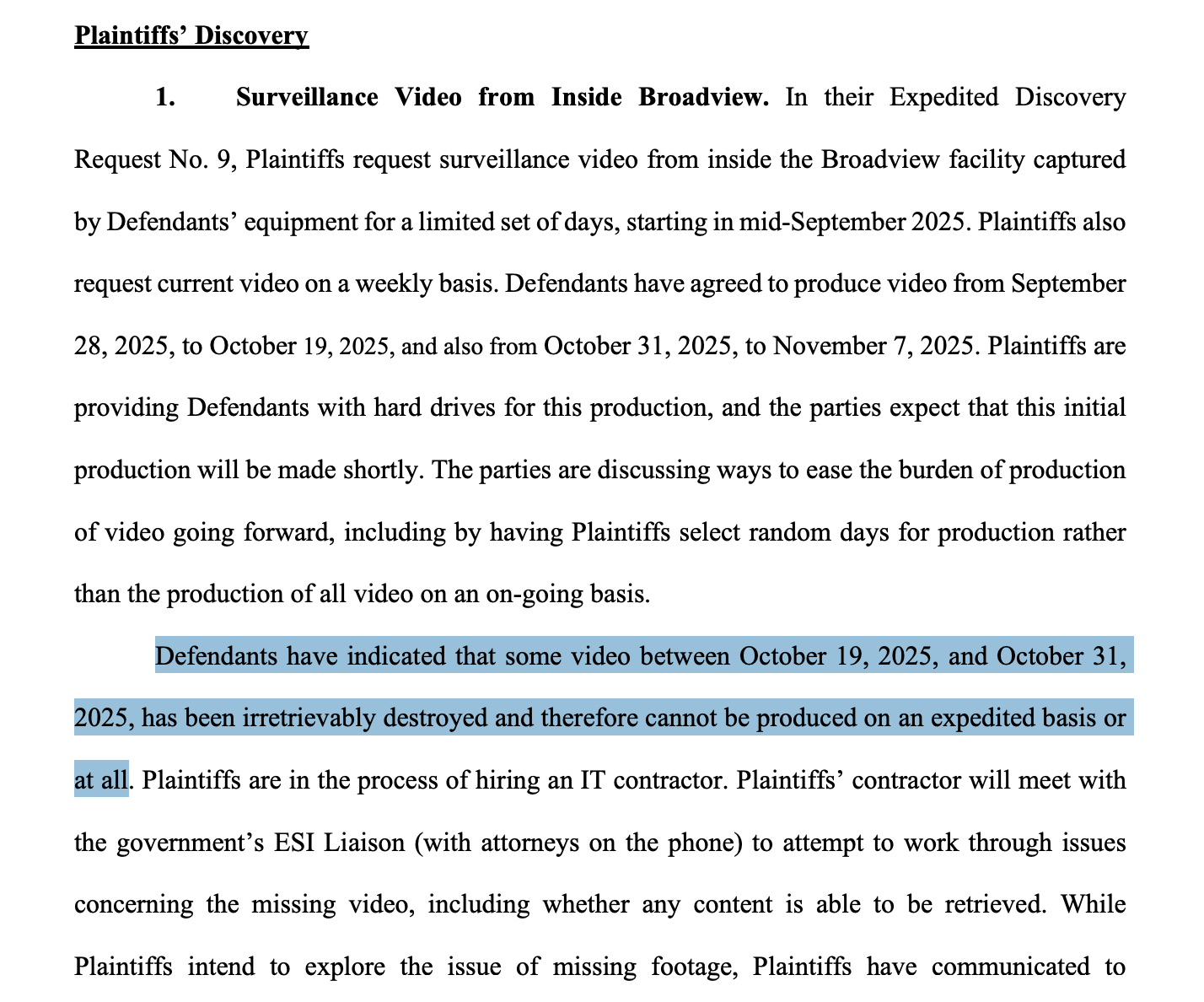

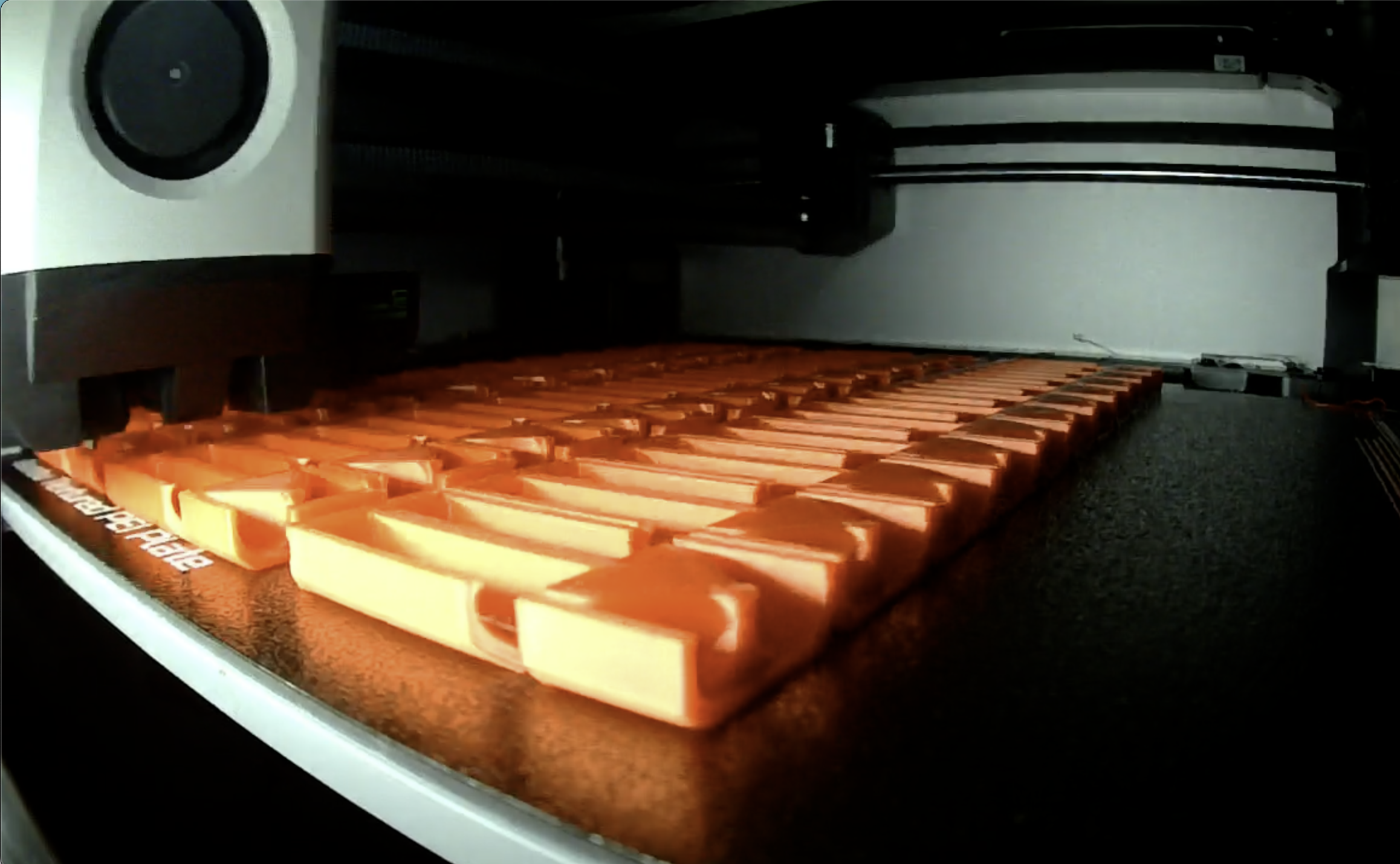

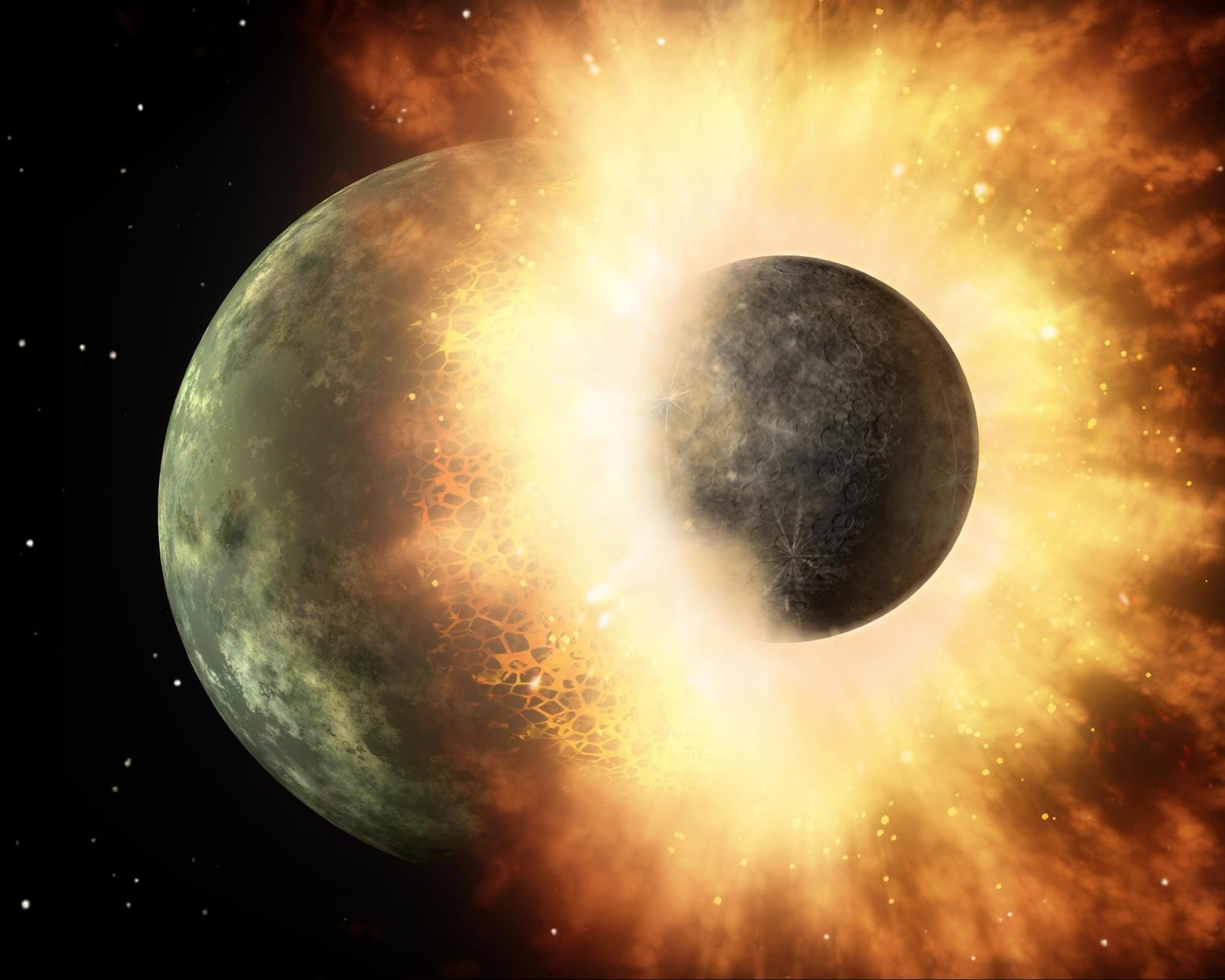

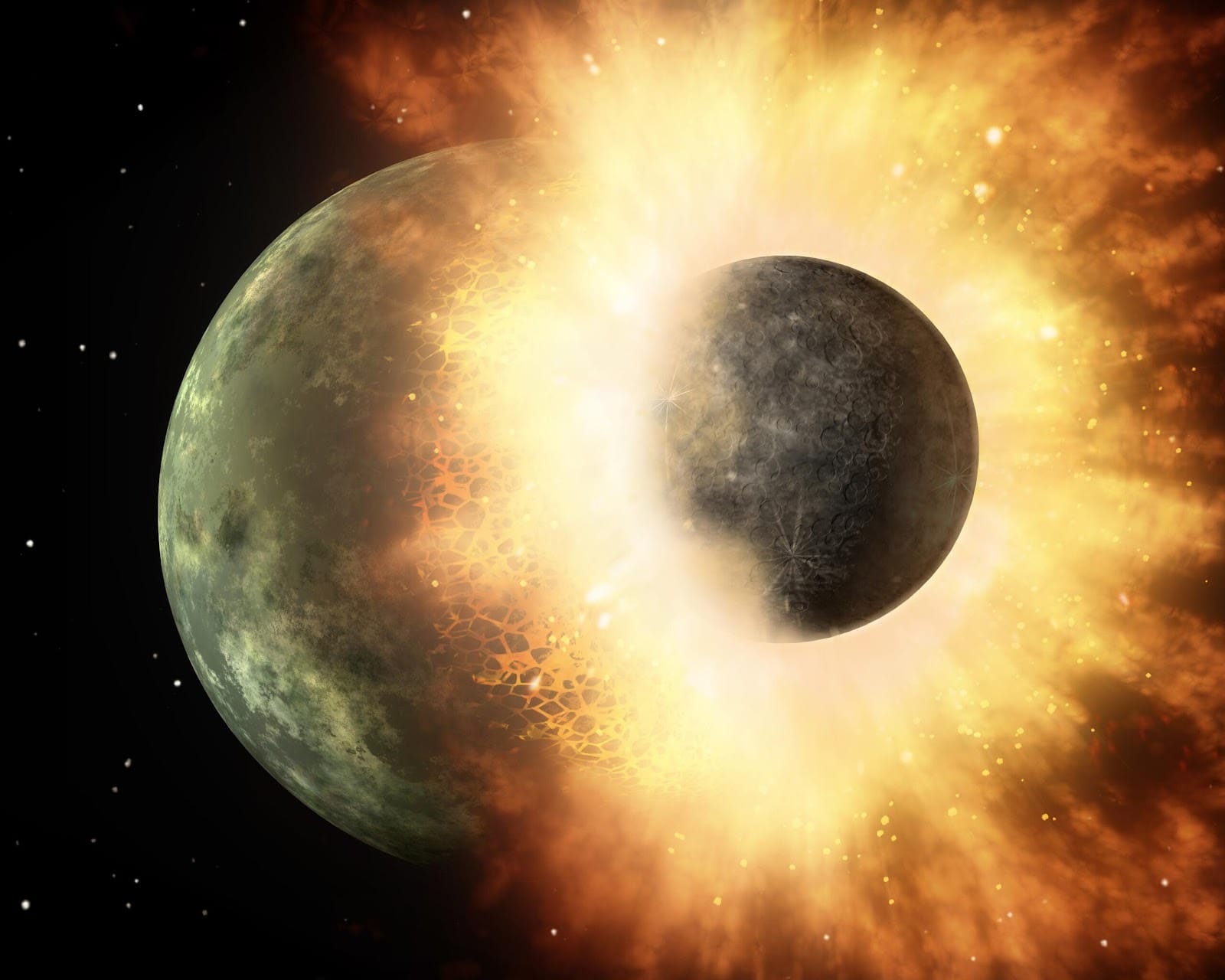

Hopp, Timo et al. “The Moon-forming impactor Theia originated from the inner Solar System.” Science.

Earth had barely been born before a Mars-sized planet, known as Theia, smashed into it some 4.5 billion years ago. The debris from the collision coalesced into what is now our Moon, which has played a key role in Earth’s habitability, so we owe our lives in part to this primordial punch-up.

Scientists have now revealed new details about Theia by measuring the chemical makeup of “lunar samples, terrestrial rocks, and meteorites…from which Theia and proto-Earth might have formed,” according to a new study. They conclude that Theia likely originated in the inner Solar System, based on the chemical signatures that this shattered world left behind on the Moon and Earth.

“We found that all of Theia and most of Earth’s other constituent materials originated from the inner Solar System,” said researchers led by Timo Hopp of The University of Chicago and the Max Planck Institute for Solar System Research. “Our calculations suggest that Theia might have formed closer to the Sun than Earth did.”

Wherever its actual birthplace, what remains of Theia is now mixed into the Moon and may also lie buried as giant undigested slabs inside Earth’s mantle. Rest in pieces, sister.

Goosebumps of yore

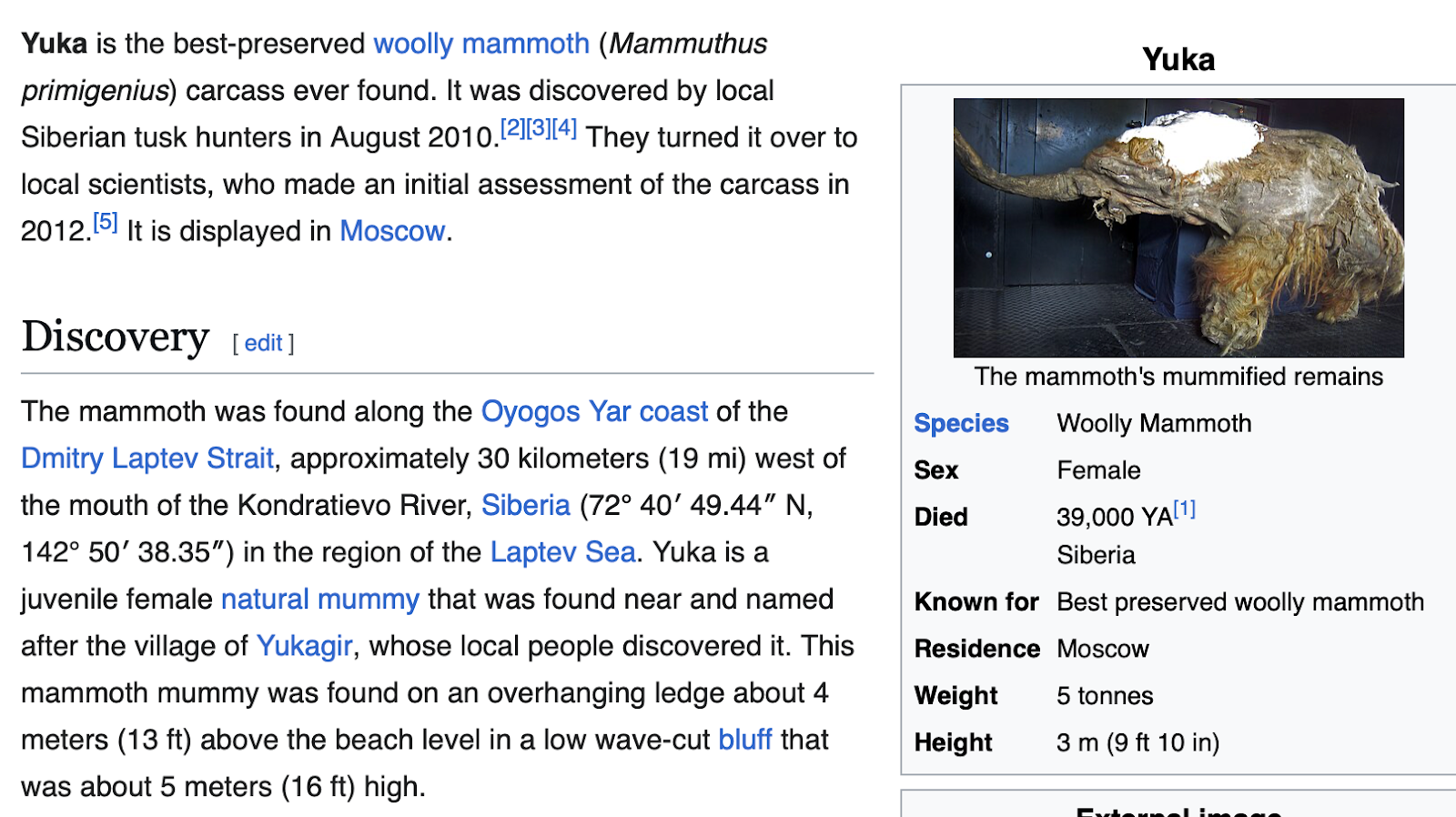

You’ve heard of the albatross around your neck, but what about the goose on your back? A new study reports the discovery of a 12,000-year-old artifact in Israel that is the “earliest known figurine to depict a human–animal interaction” with its vision of a goose mysteriously draped over a woman’s spine and shoulders.

The tiny, inch-high figurine was recovered from a settlement built by the prehistoric Natufian culture, and it may represent some kind of sex thing.

“We…suggest that by modeling a goose in this specific posture, the Natufian manufacturer intended to portray the trademark pattern of the gander’s mating behavior,” said researchers led by Laurent Davin of the Hebrew University of Jerusalem. “This kind of imagined mating between humans and animal spirits is typical of an animistic perspective, documented in cross-cultural archaeological and ethnographic records in specific situations” such as an “erotic dream” or “shamanistic vision.”

First, the bizarre Greek myth of Leda and the Swan, and now this? What is it about ancient cultures and weird waterfowl fantasies? In any case, my own interpretation is that the goose was just tired and needed a piggyback (or gaggle-back).

Thanks for reading! See you next week.