Steam Bends to Payment Processors on Porn Games

Steam, the dominant digital storefront for PC games operated by Valve, updated its guidelines to forbid “certain kinds of adult content” and blamed restrictions from payment processors and financial institutions. The update was initially spotted by SteamDB.info, a platform that tracks and publishes data about Steam, and reported by the Japanese gaming site Gamespark.

The update is yet another signal that payment processors are becoming more vigilant about which online platforms hosting adult content they will provide services to, and another clear sign that they are currently the ultimate arbiter of what kind of content can be made easily available online.

Steam’s policy change appears under the onboarding portion of its Steamworks documentation for developers and publishers. The 15th item on a list of “what you shouldn’t publish on Steam” now reads: “Content that may violate the rules and standards set forth by Steam’s payment processors and related card networks and banks, or internet network providers. In particular, certain kinds of adult only content.”

It’s not clear when exactly Valve updated this list, but an archive of this page from April shows that it only had 14 items then. Other items that were already on the list included “nude or sexually explicit images of real people” and “adult content that isn’t appropriately labeled and age-gated,” but Valve did not previously mention payment processors specifically.

"We were recently notified that certain games on Steam may violate the rules and standards set forth by our payment processors and their related card networks and banks," Valve spokesperson Kaci Aitchison Boyle told me in an email. "As a result, we are retiring those games from being sold on the Steam Store, because loss of payment methods would prevent customers from being able to purchase other titles and game content on Steam. We are directly notifying developers of these games, and issuing app credits should they have another game they’d like to distribute on Steam in the future."

Valve did not respond to questions about where developers might find more details about payment processors’ rules and standards.

SteamDB.info, which also tracks when games are added to or removed from Steam, noted that many adult games have been removed from Steam in the last 24 hours. Sex games, many of which are of very low quality and sometimes include very extreme content, have been common on Steam for years. In April, I wrote about a “rape and incest” game called No Mercy, which the developers eventually voluntarily removed from Steam after pressure from users, media, and lawmakers in the UK. The majority of the recently removed games I saw revolve around similar themes, but we don’t know whether they were removed by the developers or by Valve, or, if Valve removed them, whether the recent policy change was the reason. Games are removed from Steam every day for a variety of reasons, including expired licensing deals or developers no longer wanting to support a game.

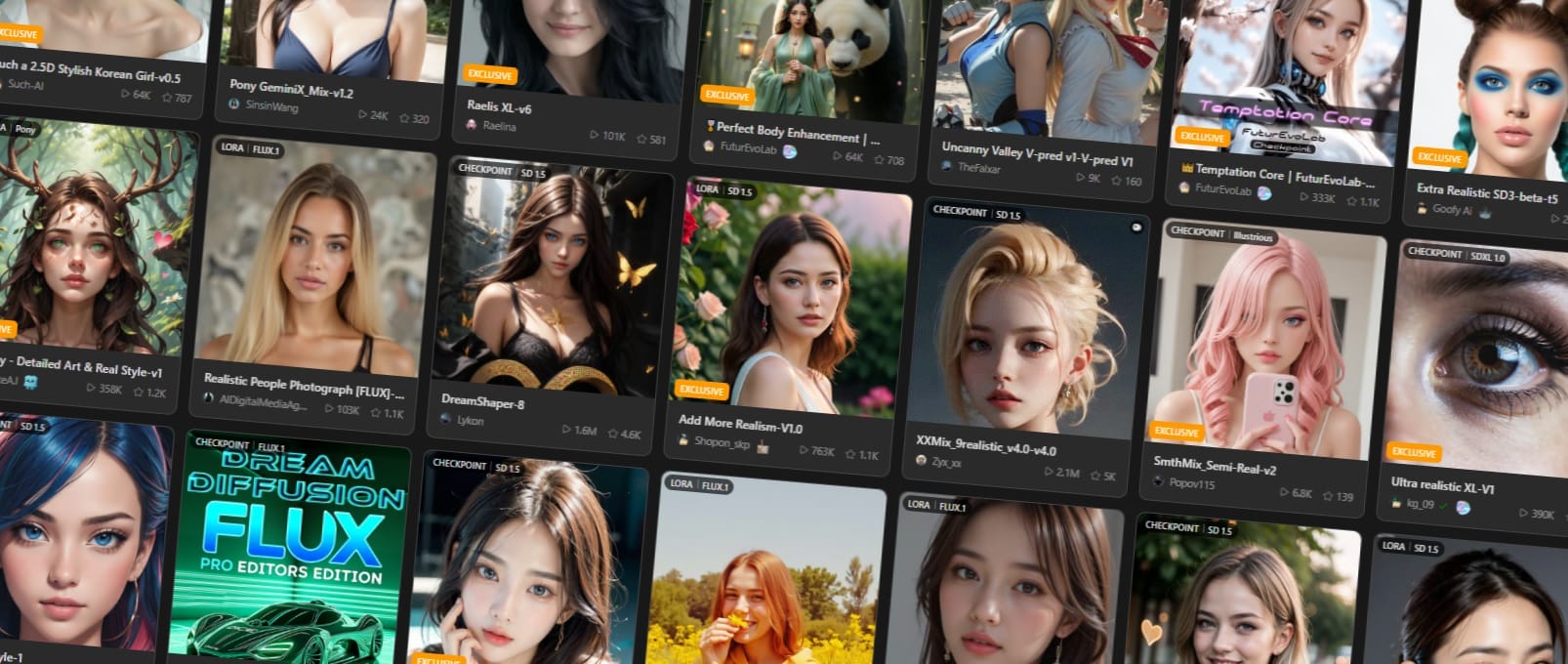

However, Steam’s policy change comes at a time when we’ve seen increased pressure from payment processors around adult content. We recently reported that payment processors have forced two major AI model sharing platforms, Civitai and Tensor.Art, to remove certain adult content.

Update: This story has been updated with comment from Valve.